In the dynamic world of finance, artificial intelligence offers unparalleled efficiency in managing compliance tasks.

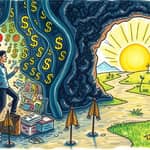

Yet many of these systems operate as black boxes whose reasoning cannot be inspected, creating a critical trust deficit.

Explainable AI (XAI) emerges as a transformative solution, making decision-making processes transparent and understandable to humans.

This transparency is not just a technical feature; it is the bedrock of regulatory confidence and operational success.

As financial institutions navigate complex landscapes, XAI bridges the gap between innovation and accountability.

Explainable AI refers to systems that reveal the logic behind their decisions.

It works by highlighting the key variables and patterns that drove a given output, turning data into a coherent narrative.

This approach transforms AI from an opaque tool into a reliable partner.
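The simplest way to see what "highlighting key variables" can mean is an inherently explainable additive score. Each feature's contribution is its weight times its value, so the explanation falls directly out of the model. Below is a minimal sketch. The feature names, weights, and bias are hypothetical and not taken from any real system.

```python
# Hypothetical feature names and weights, for illustration only.
WEIGHTS = {
    "txn_amount_zscore": 0.8,
    "high_risk_country": 1.5,
    "new_account": 0.6,
    "cash_intensive": 1.1,
}
BIAS = -2.0


def score_with_explanation(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the raw score plus each feature's contribution (weight * value).

    Because the model is additive, BIAS + sum(contributions) equals the score
    exactly: the explanation is the model, not an approximation of it."""
    contributions = [(name, w * features.get(name, 0.0)) for name, w in WEIGHTS.items()]
    score = BIAS + sum(c for _, c in contributions)
    # Largest absolute contribution first: the variables an analyst should read first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


if __name__ == "__main__":
    score, drivers = score_with_explanation(
        {"txn_amount_zscore": 2.5, "high_risk_country": 1.0, "new_account": 0.0, "cash_intensive": 1.0}
    )
    print(f"score = {score:.2f}")
    for name, contribution in drivers:
        print(f"  {name:<18} {contribution:+.2f}")
```

A logistic model applies a sigmoid on top of this same linear score. The contributions therefore still explain it exactly, but on the log-odds scale.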

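XAI can be categorized into two groups. Inherently explainable models, such as scorecards and decision trees, have a structure that is itself the explanation. Post-hoc explanation techniques, such as SHAP and LIME, explain a model after it has been trained.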

Both methods aim to demystify AI, ensuring that every decision is justifiable.
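Post-hoc techniques work on a model that is already trained and may be opaque. The sketch below uses occlusion, one of the simplest such techniques (it is not SHAP or LIME). It replaces one feature at a time with a baseline value and measures how much the output changes. The `black_box` function, the feature names, and the all-zero baseline are assumptions for illustration.

```python
from typing import Callable

Features = dict[str, float]


def occlusion_attribution(
    model: Callable[[Features], float], x: Features, baseline: Features
) -> dict[str, float]:
    """Attribute model(x) to features by resetting one feature at a time to its baseline."""
    full_output = model(x)
    attributions = {}
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]  # "occlude" a single feature
        attributions[name] = full_output - model(perturbed)
    return attributions


def black_box(f: Features) -> float:
    # Hypothetical stand-in for an opaque model; it contains an interaction
    # term (amount * foreign) that the explainer knows nothing about.
    return 0.5 * f["amount"] + 2.0 * f["amount"] * f["foreign"] + 0.1 * f["velocity"]


if __name__ == "__main__":
    x = {"amount": 3.0, "foreign": 1.0, "velocity": 4.0}
    baseline = {"amount": 0.0, "foreign": 0.0, "velocity": 0.0}
    for name, value in occlusion_attribution(black_box, x, baseline).items():
        print(f"{name}: {value:+.2f}")
```

The stand-in model has an interaction between `amount` and `foreign`. As a result, the attributions here add up to more than the score's change from baseline. Methods such as SHAP split interaction effects so that attributions sum exactly, at a higher computational cost.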

Financial institutions face a daunting challenge: they need advanced AI for scale, but it introduces transparency risks.

Opaque black-box models have drawn regulatory pushback, putting the compliance programs that rely on them at risk.

Globally, regulators emphasize that firms must explain every flagged match to control risk effectively.

This paradox highlights the urgent need for XAI to align technology with regulatory demands.

Without explainability, institutions risk losing trust and facing severe penalties.

Regulatory requirements vary across regions, but the call for transparency is universal.

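In the European Union, the AI Act requires high-risk systems to be transparent enough for users to interpret their outputs. This category includes systems used to assess the creditworthiness of individuals.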

It ensures human oversight and traceability in financial operations.

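Under the GDPR, people subject to solely automated decisions with legal or similarly significant effects are entitled to meaningful information about the logic involved. This is often described as a right to explanation.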

Clear decision logic also simplifies internal investigations and reduces audit burdens.

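In the United States, the Fair Credit Reporting Act requires adverse action notices when information in a consumer report contributes to an adverse credit decision, so lenders need decisions that are explainable and auditable.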

Federal Reserve guidelines and OCC guidance stress model risk management and transparency.

The UK's FCA focuses on proportionate, evidence-based assurance in AI systems.

US supervisory guidance such as SR 11-7 and international standards such as the FATF Recommendations underscore documentation needs.

This table illustrates the alignment in regulatory demands for explainability.

Adhering to these frameworks builds a foundation of trust with authorities.

To meet regulatory standards, institutions should focus on four cornerstone principles.

These principles enable firms to demonstrate sound, defensible compliance decisions.

They transform regulatory challenges into manageable, systematic practices.

XAI resolves critical bottlenecks in compliance operations, enhancing efficiency.

In black box systems, analysts waste hours reverse-engineering alerts without clear logic.

XAI allows compliance professionals to verify reasoning without technical expertise.

This reduces operational drag and speeds up routine reviews significantly.

For audit readiness, XAI ensures every decision is fully documented and reproducible.

Regulators seek details on alert triggers, data support, and system validation.

Explainability provides seamless documentation, enabling confident audit processes.
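The sketch below shows one way to record what regulators ask about: the alert trigger (`reasons`), the supporting data (`data_sources`), and the validated model version. Hashing the logged inputs lets a reviewer confirm that a later replay used identical data. The record layout, field names, and decision rule are hypothetical.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone


def _fingerprint(inputs: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal inputs
    # always produce the same SHA-256 hash.
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_record(alert_id, model_version, inputs, reasons, data_sources, decision):
    """Build one audit-log entry (hypothetical schema) for a single alert."""
    return {
        "alert_id": alert_id,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": copy.deepcopy(inputs),  # snapshot, so later edits by the caller don't leak in
        "input_sha256": _fingerprint(inputs),
        "reasons": reasons,
        "data_sources": data_sources,
        "decision": decision,
    }


def replay_matches(record, model) -> bool:
    """True if the logged inputs are untouched and re-running the model
    on them reproduces the logged decision."""
    untouched = _fingerprint(record["inputs"]) == record["input_sha256"]
    return untouched and model(record["inputs"]) == record["decision"]


if __name__ == "__main__":
    def model(inputs):
        # Hypothetical decision rule standing in for a versioned model.
        return "escalate" if inputs["risk_score"] >= 0.7 else "close"

    rec = audit_record(
        "ALERT-0001", "txn-risk 1.4.2", {"risk_score": 0.82, "country": "XX"},
        ["risk_score 0.82 >= 0.70"], ["customer KYC profile"], "escalate",
    )
    print(json.dumps(rec, indent=2))
    print("reproducible:", replay_matches(rec, model))
```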

Model governance gaps under frameworks like SR 11-7 are addressed with clear justifications.

Institutions must show how models behave and justify decisions technically.

XAI meets these requirements by offering insights into model behavior.
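Sensitivity analysis is a standard part of model validation and one way to show how a model behaves. A sensitivity sweep varies one input while holding the others fixed and checks that the output moves in the expected direction. Below is a minimal sketch. The models, feature names, and expected directions are hypothetical.

```python
from typing import Callable, Sequence


def sensitivity_sweep(
    model: Callable[[dict], float],
    base: dict,
    feature: str,
    values: Sequence[float],
    increasing: bool = True,
):
    """Vary `feature` over ascending `values`, holding the other inputs at
    `base`, and flag steps where the output moves against the expected direction."""
    outputs = []
    for v in values:
        x = dict(base)
        x[feature] = v
        outputs.append(model(x))
    violations = []
    for i in range(len(values) - 1):
        step = outputs[i + 1] - outputs[i]
        if (increasing and step < 0) or (not increasing and step > 0):
            violations.append((values[i], values[i + 1], outputs[i], outputs[i + 1]))
    return outputs, violations


if __name__ == "__main__":
    # Hypothetical model whose risk unexpectedly drops above an amount of 5.
    suspect = lambda x: x["amount"] if x["amount"] < 5 else x["amount"] - 3
    _, problems = sensitivity_sweep(suspect, {"amount": 0}, "amount", range(1, 9))
    for lo, hi, y_lo, y_hi in problems:
        print(f"amount {lo} -> {hi}: score fell from {y_lo} to {y_hi}")
```

Each violation names the exact input range where behavior breaks the expected direction. A validator can cite that range in the model documentation.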

Alert accuracy improves as explanations expose the signals behind noisy alerts, letting teams filter out noise and focus on genuine risks.

By sharing contextual logic, compliance teams prioritize true threats effectively.

This increases speed and consistency in compliance operations across the board.
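Filtering noise only builds trust if the filtering is itself explainable. Below is a minimal sketch, assuming model scores in [0, 1] and a single hypothetical review threshold. It ranks alerts above the threshold for review and keeps every suppressed alert together with the rule that suppressed it. The `Alert` class and `triage` function are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    alert_id: str
    score: float  # assumed model risk score in [0, 1]
    reasons: list[str] = field(default_factory=list)


def triage(alerts: list[Alert], review_threshold: float):
    """Return (review_queue, suppressed).

    The queue is ordered by descending score so analysts see the strongest
    risks first; suppressed alerts are kept with the rule that suppressed
    them, so the filtering step can be explained and audited too."""
    queue = sorted(
        (a for a in alerts if a.score >= review_threshold),
        key=lambda a: a.score,
        reverse=True,
    )
    suppressed = [
        (a, f"score {a.score:.2f} below review threshold {review_threshold:.2f}")
        for a in alerts
        if a.score < review_threshold
    ]
    return queue, suppressed


if __name__ == "__main__":
    alerts = [
        Alert("A1", 0.91, ["name match 0.93 to list entry"]),
        Alert("A2", 0.35, ["round-amount transfer"]),
        Alert("A3", 0.72, ["new counterparty in high-risk country"]),
    ]
    queue, suppressed = triage(alerts, review_threshold=0.5)
    for a in queue:
        print("REVIEW ", a.alert_id, a.score, a.reasons)
    for a, why in suppressed:
        print("SUPPRESS", a.alert_id, why)
```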

XAI finds practical applications in key areas of financial regulation.

In AML systems, explainability ties every alert to its sourced data, such as a sanctions list entry or a customer profile.

This provides complete insight into decision-making, enhancing compliance reliability.
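A screening hit becomes explainable when it carries its evidence: the list entry matched, the similarity score, and the threshold applied. The sketch below uses Python's `difflib.SequenceMatcher` as a simple stand-in for fuzzy name matching. Production screening engines typically add transliteration and phonetic matching. The watchlist entries, list name, and 0.85 threshold are hypothetical.

```python
import unicodedata
from difflib import SequenceMatcher

# Hypothetical watchlist; a real system would load an official sanctions list.
WATCHLIST = [
    {"name": "Ivan Petrovich Sidorov", "source_list": "EXAMPLE-LIST", "entry_id": "X-001"},
    {"name": "Acme Trading FZE", "source_list": "EXAMPLE-LIST", "entry_id": "X-002"},
]


def normalize(name: str) -> str:
    # Strip accents, lowercase, and collapse whitespace so trivial spelling
    # differences neither hide nor create matches.
    decomposed = unicodedata.normalize("NFKD", name)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(without_marks.lower().split())


def screen(customer_name: str, threshold: float = 0.85) -> list[dict]:
    """Return each watchlist hit together with the evidence behind it."""
    query = normalize(customer_name)
    hits = []
    for entry in WATCHLIST:
        similarity = SequenceMatcher(None, query, normalize(entry["name"])).ratio()
        if similarity >= threshold:
            hits.append({
                "entry_id": entry["entry_id"],
                "matched_name": entry["name"],
                "source_list": entry["source_list"],
                "similarity": round(similarity, 3),
                "threshold": threshold,
                "explanation": (
                    f"name similarity {similarity:.2f} >= {threshold:.2f} against "
                    f"{entry['source_list']} entry {entry['entry_id']}"
                ),
            })
    return hits


if __name__ == "__main__":
    for hit in screen("Ivan Petrovitch Sidorov"):
        print(hit["explanation"])
```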

For credit decisions, explainability boosts approval rates and customer satisfaction.

It aligns with regulatory emphasis on fairness and transparency.
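For credit decisions, explainability often takes the form of specific reasons given to a declined applicant. One commonly described approach uses a points-based scorecard. It ranks each characteristic by how far the applicant fell below the maximum available points. The sketch below assumes a hypothetical scorecard, cutoff, and set of reason statements.

```python
# Hypothetical points-based scorecard: points awarded for each attribute bin.
SCORECARD = {
    "payment_history": {"no_late_payments": 120, "one_or_two_late": 80, "three_plus_late": 30},
    "utilization": {"under_30pct": 100, "30_to_70pct": 60, "over_70pct": 20},
    "credit_age": {"over_5y": 90, "2_to_5y": 60, "under_2y": 30},
}

# Hypothetical plain-language reason statements, one per characteristic.
REASONS = {
    "payment_history": "Delinquent past or present credit obligations",
    "utilization": "Proportion of balances to credit limits is too high",
    "credit_age": "Length of credit history is too short",
}


def decide(applicant: dict[str, str], cutoff: int, max_reasons: int = 2):
    """Score an applicant; for a decline, return the characteristics where
    the applicant fell furthest below the maximum available points."""
    points = {c: SCORECARD[c][applicant[c]] for c in SCORECARD}
    total = sum(points.values())
    if total >= cutoff:
        return total, "approve", []
    shortfall = {c: max(SCORECARD[c].values()) - points[c] for c in SCORECARD}
    ranked = sorted((c for c in shortfall if shortfall[c] > 0), key=shortfall.get, reverse=True)
    return total, "decline", [REASONS[c] for c in ranked[:max_reasons]]


if __name__ == "__main__":
    applicant = {"payment_history": "one_or_two_late", "utilization": "over_70pct", "credit_age": "2_to_5y"}
    total, outcome, reasons = decide(applicant, cutoff=220)
    print(total, outcome)
    for r in reasons:
        print(" -", r)
```

In this example the applicant lost the most points on utilization (80), then payment history (40). Those two become the stated reasons, in that order.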

These use cases illustrate how XAI transforms theoretical benefits into actionable results.

Rather than relying solely on algorithms, a partnership model combines AI with human oversight.

This approach harnesses automation's speed and consistency while preserving human judgment.

It acknowledges current XAI limitations while meeting regulatory requirements effectively.

Human oversight ensures final decisions are accountable and nuanced.

This builds trust through verified outcomes, not just algorithmic transparency.

AI integrates into human-focused workflows across the regulatory lifecycle.

This model fosters a collaborative environment where technology and expertise thrive together.
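The partnership model can be made concrete in a case workflow. The model produces a recommendation with reasons, and only a named reviewer sets the final decision. An override of the model requires a written rationale. The sketch below is one way to enforce that. The `Case` class, the function names, and the 0.7 escalation cutoff are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    case_id: str
    model_score: float
    model_reasons: list[str]
    recommendation: str = ""
    final_decision: Optional[str] = None
    reviewer: Optional[str] = None
    reviewer_note: str = ""


def recommend(case: Case, escalate_at: float = 0.7) -> Case:
    # The model only recommends; it never sets the final decision.
    case.recommendation = "escalate" if case.model_score >= escalate_at else "close"
    return case


def sign_off(case: Case, reviewer: str, decision: str, note: str = "") -> Case:
    # A named person records the accountable decision. Disagreeing with the
    # model is allowed, but the override must carry a written rationale.
    if not case.recommendation:
        raise ValueError("Case has no model recommendation yet")
    if decision != case.recommendation and not note.strip():
        raise ValueError("Overriding the model recommendation requires a rationale")
    case.final_decision = decision
    case.reviewer = reviewer
    case.reviewer_note = note
    return case


if __name__ == "__main__":
    case = recommend(Case("C-1", 0.82, ["name similarity 0.93 to list entry"]))
    sign_off(case, "analyst_17", "close", "Date of birth does not match the list entry")
    print(case)
```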

Despite advancements, significant gaps persist in AI risk management.

Statistics show that 47% of organizations have an AI risk framework, but 70% lack ongoing monitoring.

This misalignment can lead to non-compliance and severe repercussions.
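Ongoing monitoring can start with something as simple as checking whether a model's score distribution has drifted. The population stability index (PSI) is one widely used drift metric. It sums (a − e) · ln(a / e) over score bins, where e and a are the baseline and recent shares of scores falling in each bin. Below is a minimal sketch. The bin edges are assumptions, and so are the 0.1 and 0.25 bands, which are a common rule of thumb rather than a regulatory threshold.

```python
import bisect
import math


def _bin_shares(values, edges, eps):
    counts = [0] * (len(edges) - 1)
    for v in values:
        # bisect_right locates the bin; clamping puts out-of-range values
        # (including v == edges[-1]) into the first or last bin.
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), len(counts) - 1)] += 1
    total = sum(counts)
    # eps stops an empty bin from producing log(0) or a division by zero.
    return [max(c / total, eps) for c in counts]


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum over bins of (a - e) * ln(a / e)."""
    e = _bin_shares(expected, edges, eps)
    a = _bin_shares(actual, edges, eps)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def psi_band(psi):
    # Widely quoted industry rule of thumb, not a regulatory threshold.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: review the model"


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    baseline = [0.1] * 50 + [0.3] * 50 + [0.6] * 50 + [0.9] * 50
    recent = [0.1] * 20 + [0.3] * 20 + [0.6] * 20 + [0.9] * 140
    psi = population_stability_index(baseline, recent, edges)
    print(f"PSI = {psi:.3f} ({psi_band(psi)})")
```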

The EU AI Act categorizes AI systems into risk levels (unacceptable, high, limited, and minimal), each with specific obligations.

High-risk systems require stringent documentation and transparency protocols.

Assessing risk levels and meeting corresponding requirements remains a challenge.

These statistics underscore the need for robust XAI implementations to close gaps.
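The EU AI Act's tiered structure lends itself to a first-pass check of an AI inventory. The sketch below tags each system with a risk tier and a checklist of obligations. The use-case tags and the mapping are hypothetical simplifications; classifying a real system depends on the Act's text. Anything unmapped is routed to review rather than silently treated as minimal risk.

```python
# Hypothetical mapping from internal use-case tags to EU AI Act risk tiers.
# Simplified for illustration; real classification requires reading the Act
# (e.g. Article 5 for prohibited practices, Annex III for high-risk uses).
TIER_BY_USE_CASE = {
    "social_scoring": "prohibited",
    "creditworthiness_of_natural_persons": "high",
    "customer_facing_chatbot": "limited",
    "spam_filtering": "minimal",
}

OBLIGATIONS = {
    "prohibited": ["do not place on the market, put into service, or use"],
    "high": [
        "risk management system",
        "data governance",
        "technical documentation",
        "automatic record-keeping (logs)",
        "transparency and instructions for deployers",
        "human oversight",
        "accuracy, robustness and cybersecurity",
    ],
    "limited": ["inform people that they are interacting with an AI system"],
    "minimal": [],  # no tier-specific obligations
}


def triage_inventory(systems: dict[str, str]) -> list[dict]:
    """Attach a tier and an obligation checklist to each system in the inventory."""
    report = []
    for name, use_case in systems.items():
        tier = TIER_BY_USE_CASE.get(use_case)
        report.append({
            "system": name,
            "use_case": use_case,
            "tier": tier or "needs_review",
            "obligations": list(
                OBLIGATIONS.get(tier, ["escalate to legal/compliance for classification"])
            ),
        })
    return report


if __name__ == "__main__":
    inventory = {
        "loan-scorer": "creditworthiness_of_natural_persons",
        "aml-monitor": "transaction_monitoring",
        "help-bot": "customer_facing_chatbot",
    }
    for row in triage_inventory(inventory):
        print(f"{row['system']:<12} {row['tier']:<13} {row['obligations']}")
```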

Failing to comply with AI regulations carries severe consequences.

Opaque AI models risk non-compliance with global regulations, exposing firms to enforcement actions.

Misidentifying sanctioned entities leads to fines and potential harm to trust.

This highlights why explainability is not optional but essential for survival.

Organizations need AI compliance when using AI for hiring, lending, or risk scoring.

It applies when processing personal data or operating under GDPR or similar regulations.

Integrating third-party AI or generative systems also necessitates compliance measures.

Key practices include conducting Data Protection Impact Assessments and validating model performance.

Logging decisions for auditability and applying cybersecurity safeguards are crucial.

Documenting assumptions and limitations ensures transparency from development to deployment.

Modern AI compliance platforms can automate much of this governance work.

Organizations can tailor risk thresholds and alert sensitivity to their policies.

This flexibility allows institutions to meet specific regulatory obligations with clear documentation.

Regulators expect this level of customization and justification in compliance systems.
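Tailored thresholds are only defensible if each one carries its justification. The sketch below uses a hypothetical configuration schema. Every segment's alert threshold records a rationale, an approver, and an approval date. A validation step rejects settings that are out of range, duplicated, or undocumented. The field names and the [0, 1] score range are assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ThresholdSetting:
    segment: str            # e.g. a customer segment or product line (hypothetical)
    alert_threshold: float  # assumes model scores are in [0, 1]
    rationale: str          # why this value was chosen
    approved_by: str        # accountable owner
    approved_on: date


def validate_settings(settings: list[ThresholdSetting]) -> list[str]:
    """Return a list of problems; an empty list means every threshold is in
    range, unique per segment, and carries a rationale and an approver."""
    problems = []
    seen = set()
    for s in settings:
        if s.segment in seen:
            problems.append(f"{s.segment}: duplicate segment")
        seen.add(s.segment)
        if not 0.0 <= s.alert_threshold <= 1.0:
            problems.append(f"{s.segment}: threshold {s.alert_threshold} outside [0, 1]")
        if not s.rationale.strip():
            problems.append(f"{s.segment}: missing rationale")
        if not s.approved_by.strip():
            problems.append(f"{s.segment}: missing approver")
    return problems


if __name__ == "__main__":
    settings = [
        ThresholdSetting("retail", 0.80, "hypothetical: set from back-testing", "Head of FCC", date(2024, 1, 15)),
        ThresholdSetting("corporate", 1.2, "", "Head of FCC", date(2024, 1, 15)),
    ]
    for problem in validate_settings(settings):
        print(problem)
```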

Explainable AI is more than a technological upgrade; it is a strategic imperative for building trust.

By making AI transparent, institutions can navigate regulatory complexities with confidence.

This fosters a culture of accountability and innovation in financial compliance.

Embrace XAI to transform challenges into opportunities for growth and reliability.

Together, technology and human insight can create a future where trust is the cornerstone of every decision.
